What is AI Human Impact?

AI-intensive companies surveyed in human and ethical terms for investment purposes.

Purposes

Map AI technologies ethically so that investors can act in accord with their own values: AI Human Impact expands investor freedom.

Catalyze more and faster artificial intelligence innovation in three ways. First, AI Human Impact illuminates scenes of potential social rejection including unfair outcomes resulting from biased training data. More significantly, irresolvable dilemmas are delineated so that they can be capably navigated, such as the facial recognition trade-off between the right to privacy and the desire for personalized services. Finally, the strategy identifies engineering that expands human capabilities and potential instead of narcotizing users in dark patterns, and so orients AI development toward sustainable gains, and subsequent risk-adjusted returns.

AI Human Impact: ESG for the Big Data Economy

AI Human Impact (AIHI) and Environmental, Social, and Governance (ESG) are both humanist investment strategies investigating nonfinancial criteria, but AIHI updates for, and focuses on, data-driven enterprises.

Premise: ESG criteria are well suited to traditional companies in the industrial economy, but not AI-intensive companies in the digital economy: navigating the unprecedented economic powers, social effects, and ethical dilemmas rising along with AI requires novel categories for evaluation.

Two representative differences between AIHI and ESG

Environment

Environmental effects are the topline concern for ESG investors, but AI companies don’t typically produce toxic waste on an industrial scale, strip-mine the earth, or clear-cut forests. Environmental ratings connect only tenuously to facial recognition technology, to healthcare algorithms filtering electrocardiograms for abnormalities, to romance websites promising partners.

Privacy

Industrial organizations have little interest in consumers’ personally identifying information: Ford Motor Company initially promised customers they could have any color they wished, as long as it was black. Homogenization was the key to industrial economy success, and ethical dilemmas – along with ESG responses – followed in kind. By contrast, AI runs on personalization. Netflix does not aspire to produce generic movie recommendations for homogenized demographic groups; it aims specific possibilities toward individual viewers at targeted moments. The burgeoning field of dynamic insurance does not cover population segments over extended durations; it customizes for unique clients and intervenes at critical junctures. AI healthcare is less concerned with a patient's age group than with tiny and personal heartbeat abnormalities that escape human eyes but not machine-learned analysis.

In the industrial, ESG economy, personally identifying and distinguishing information is ignored, even suppressed. In the AI economy it is obsessively gathered and leveraged. Because everything is about individuals and their unique data, the human impact of privacy decisions goes from being an afterthought to a primary concern.

Key differences

AIHI applies only to companies functioning with AI at the core of their operation.

ESG applies broadly across the economy.

AIHI accounts for humanity in the digital world.

ESG accounts most effectively for humanity in the industrial world.

AIHI categories of evaluation derive from academic AI ethics.

ESG categories of evaluation derive from UN Sustainable Development Goals.

Short White Paper: Why Traditional ESG Does Not Work for AI

AI Human Impact Example:

Insurance & the Principle of Autonomy

Continuous collection and analysis of behavioural data allows individual and dynamic risk assessment and the establishment of a continuous feedback loop to customers, with no or limited human intervention. Such digital monitoring not only enhances the quality of risk assessments but can also provide real-time insights to policyholders on their risk behaviour and individual incentives for risk reduction.

Such powerful new business models are already being launched or are clearly visible on the horizon. They include genuine peer-to-peer concepts (such as Bought by Many) and fully digital insurers (such as Oscar, InShared, Haven Life or Sherpa, for example). Ultimately, they will enhance the role of insurance from pure risk protection towards “predicting and preventing.”

- Geneva Association for the Study of Insurance Economics, Switzerland

As promised by the Geneva Association, AI insurance will intervene to prevent risk in real time. This means a skier standing atop a double black diamond run may wrestle with her vitality and her fear as she decides whether to descend, and in the midst of the uncertainty receive a text message reporting that her health insurance premiums will rise if she goes for the thrill.

The promise of dynamic insurance is options customized for you, at any particular moment. One ethical knot for AI Human Impact concerns autonomy. On one hand, this AI-powered insurance increases self-determination by providing clients more control over their policies. On the other, the reason we have health insurance in the first place is so that we can take risks, like skiing the double black diamond run, and it's easier to do that when providers don’t know what policyholders are doing.

So, does dynamic AI insurance increase autonomy, or suffocate it? Answering questions like these is the labor of AI Human Impact.

AI Human Impact Example:

OkCupid & the Principle of Dignity

OkCupid doesn’t really know what it’s doing. Neither does any other website. It’s not like people have been building these things for very long, or you can go look up a blueprint or something. Most ideas are bad. Even good ideas could be better. Experiments are how you sort all this out.

We noticed that people didn’t like it when Facebook “experimented” with their news feed. But guess what, everybody: if you use the Internet, you’re the subject of hundreds of experiments at any given time, on every site. That’s how websites work.

- Christian Rudder, OkCupid Blog

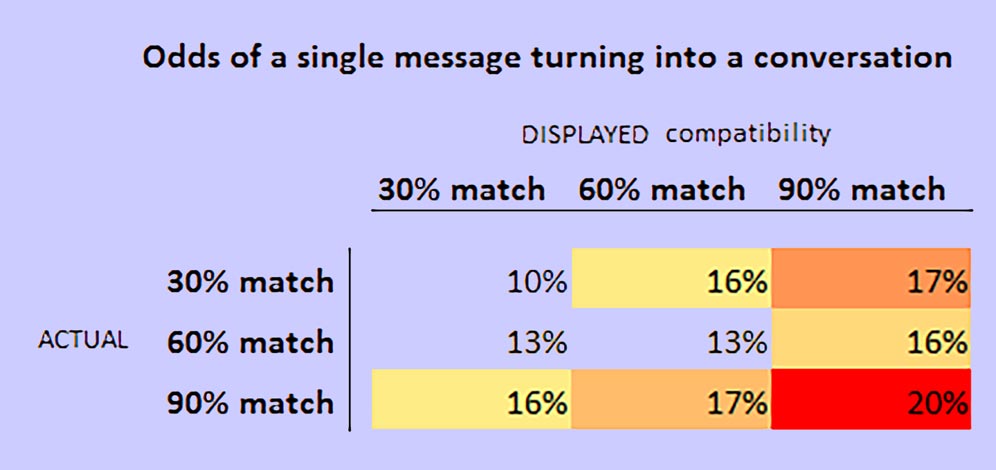

In one of those experiments, users who the OkCupid algorithms determined to be incompatible were told the opposite. When they connected, their interaction was charted by the platform’s standard metrics: how many times did they message each other, with how many words, over how long a period, and so on. Then their relationship success was compared against pairs who were judged truly compatible. Presumably, the test measured the power of positive suggestion: Do incompatible users who are told they are compatible relate with the same success as true compatibles?

The answer is not as interesting as the users’ responses. One asked, “What if the manipulation is used for what you believe does good for the person?”

Human dignity is the idea that individuals and their projects are valuable in themselves, and not merely as instruments or tools in the projects of others. Within AI Human Impact, the question dignity poses to OkCupid is: Are the romance-seekers being manipulated in experiments for their ultimate benefit because the learnings will result in a better platform and higher likelihood of romance? Or, is the manipulation about the platform’s owners and their marginally perverse curiosities?

The question’s answer is a step toward a dignity score for the company and, by extension, its parent: Match Group (Match, Tinder, Hinge …)

AI Human Impact Example:

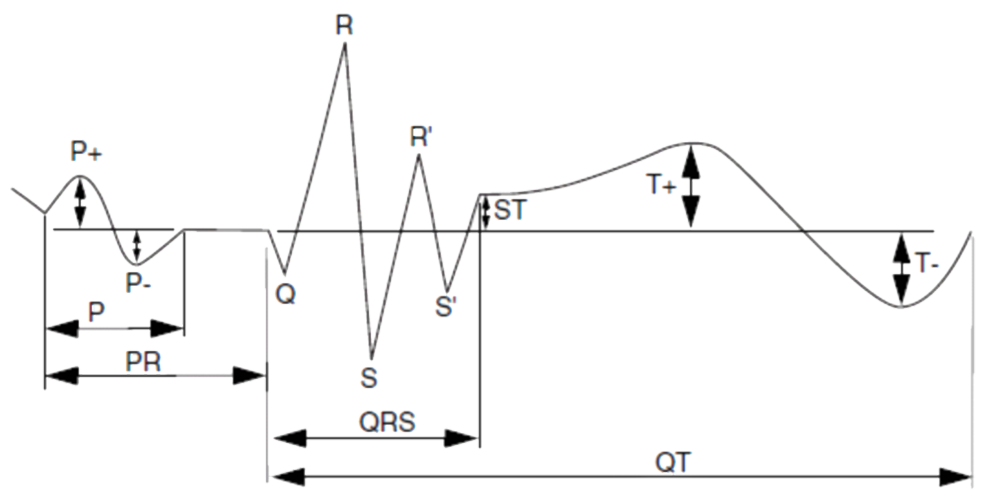

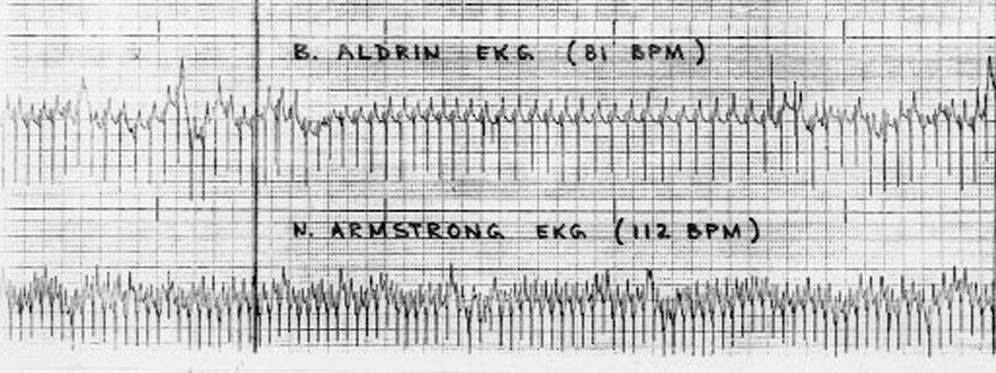

[Redacted] & the Principle of Solidarity

In 2020, an international team of philosophers, data scientists, and medical doctors performed a robust ethics evaluation on an existing, deployed, and functioning AI medical device. The team included James Brusseau who directs AI Human Impact. A nondisclosure agreement with the AI medical device manufacturer will be honored here, but in broad terms the AI is a proprietary algorithm that analyzes electrocardiograms for patterns associated with impending coronary disease. The task is ideal for artificial intelligence, which is knowledge produced by pattern recognition in large datasets, like the many measurements attributable to a heartbeat, multiplied by a long string of beats. Conveniently, efficiently, and inexpensively, the machine filters for tiny deviations and so promises to improve the quality of patients’ lives by discerning the probability of impending heart disease.

In AI medicine, because the biology of genders and races differs, there arises the risk that a diagnostic or treatment may function well for some groups while failing for others. As it happened, the machine centering our evaluation was trained on data that was limited geographically, and not always labeled in terms of gender or race. Because of the geography, it seemed possible that some races may have been over- or underrepresented in the training data.

A solidarity concern arises here. In ethics, solidarity is the social inclusiveness imperative that no one be left behind. Applied to the AI medical device, the solidarity question is: should patients from the overrepresented race(s) wait to use the technology until other races have been verified as fully included in the training data? A strict solidarity posture could respond affirmatively, while a flexible solidarity would allow use to begin so long as data gathering for underrepresented groups was also initiated. Limited or absent solidarity would be indicated by neglect of potential users, possibly because a cost/benefit analysis returned a negative result, meaning some people get left behind because it is not worth the expense of training the machine for their narrow or outlying demographic segment.

In AI Human Impact, a positive solidarity score would be assigned to the company were it to move aggressively toward ensuring an inclusive training dataset.

AI Human Impact Example:

Lemonade & the Principle of Fairness

Daniel Schreiber, the co-founder and CEO of the New York-based insurance startup Lemonade, shares concerns that the increased use of machine-learning algorithms, if mishandled, could lead to “digital redlining,” as some consumer and privacy rights advocates fear.

To ensure that an AI-led underwriting process is fair, Schreiber promotes the use of a "uniform loss ratio." If a company is engaging in fair underwriting practices, its loss ratio, or the amount it pays out in claims divided by the amount it collects in premiums, should be constant across race, gender, sexual orientation, religion and ethnicity.
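The loss ratio test described above can be sketched in a few lines. This is only an illustration under stated assumptions: the policy records, the group labels `A` and `B`, the function names, and the 0.02 tolerance are all invented here and do not come from Lemonade, which does not disclose its data.

```python
from collections import defaultdict

def loss_ratios_by_group(policies):
    """Return {group: total claims paid / total premiums collected}."""
    claims = defaultdict(float)
    premiums = defaultdict(float)
    for p in policies:
        claims[p["group"]] += p["claims_paid"]
        premiums[p["group"]] += p["premium"]
    return {g: claims[g] / premiums[g] for g in premiums}

def is_uniform(ratios, tolerance=0.02):
    """True when the highest and lowest group ratios differ by at most `tolerance`.

    The tolerance is a hypothetical threshold chosen for this sketch.
    """
    values = list(ratios.values())
    return max(values) - min(values) <= tolerance

# Invented figures for illustration only.
policies = [
    {"group": "A", "premium": 1000.0, "claims_paid": 700.0},
    {"group": "A", "premium": 1200.0, "claims_paid": 840.0},
    {"group": "B", "premium": 800.0, "claims_paid": 560.0},
    {"group": "B", "premium": 900.0, "claims_paid": 720.0},
]

ratios = loss_ratios_by_group(policies)
for group, ratio in sorted(ratios.items()):
    print(f"group {group}: loss ratio {ratio:.3f}")
print("uniform:", is_uniform(ratios))
```

In this invented data, group B's ratio is higher than group A's, meaning B's members collectively get more back per premium dollar. Under the uniform loss ratio standard, that gap would be the signal that the two groups are not priced on equal terms.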

What counts as fairness? According to the original Aristotelian dictate, it is treating equals equally, and unequals unequally.

The rule can be applied to individuals, and to groups. For individuals, fairness means clients who present unequal risk should receive unequal treatment: whether the product is car, home, or health insurance, the less likely a customer is to make a claim, the lower the premium that ought to be charged.

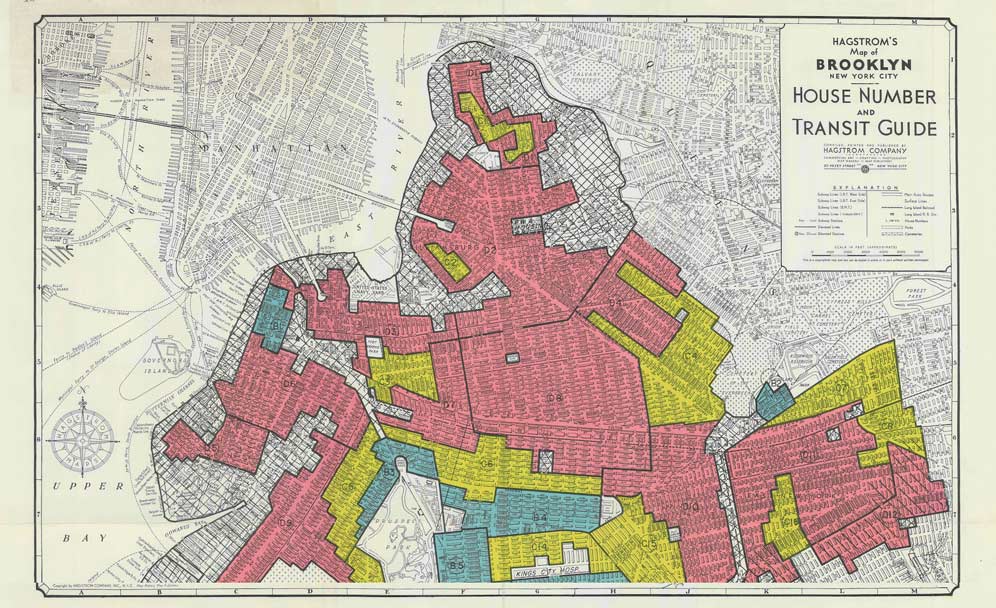

In Brooklyn, New York, individual fairness starts with statistical reality: a condominium owner in a doorman building in the ritzy and well-policed Brooklyn Heights neighborhood (home to Matt Damon, Daniel Craig, Rachel Weisz…) is less likely to be robbed than the tenant of a ground-floor apartment in the gritty Bedford-Stuyvesant neighborhood. That risk differential justifies charging the Brooklyn Heights owner less to insure more valuable possessions than the workingman out in Bed-Stuy.

What the startup insurance company Lemonade noticed – and they are not the only ones – is that premium disparities in Brooklyn neighborhoods tended to correspond with racial differences. The company doesn’t disclose its data, but the implication from the article published in Fortune is that being fair to individuals leads to apparent unfairness when the customers are grouped by race. Black residents, who largely populate Bed-Stuy, can reasonably argue that they’re getting ripped off since they pay relatively more for coverage than white residents of Brooklyn Heights.

Of course, insurance disparities measured across groups are common. Younger people pay more than older ones, and men more than women for the same auto insurance. What is changing, however, is that the hyper-personalization of AI analysis, combined with big data filtering that surfaces patterns in the treatment of racial, gender, and other identity groups, is multiplying and widening the gaps between individual and group equality. More and more, being fair to each individual is creating visible inequalities on the group level.

What Lemonade proposed to confront this problem is an AI-enabled shift from individual to group fairness in terms of loss ratios (the claims paid by the insurer divided by premiums received). Instead of applying the same ratio to all clients, it would be calibrated for equality across races, genders, and similarly protected demographics. The loss ratio used to calculate premiums for all whites would be the same one applied to other races. Instead of individuals, now it is identity groups that will be charged higher or lower premiums depending on whether they are more or less likely to make claims. (Note: Individuals may still be priced individually, but under the constraint that their loss ratio averaged with all others in their demographic equals that of other demographics.)

This is good for Lemonade because it allows them to maintain that they treat racial and gender groups equally since their quoted insurance prices all derive from a single loss ratio applied equally to the groups. It is also good for whites who live in Bed-Stuy because they now get incorporated into the rates set for Matt Damon and the rest inhabiting well-protected and well-policed neighborhoods. Of course, where there are winners, there are also losers.

More broadly, here is an incomplete set of fairness applications:

- Rates can be set individually to correspond with risk that a claim will be made: every individual is priced by the same loss ratio, and so pays an individualized insurance premium, with a lower rate reflecting lower risk.

- Rates can be set for groups to correspond with total claims predicted to be made by their collected members: demographic segments are priced by the same loss ratio. But the different degrees of risk corresponding with the diverse groups result in diverse premiums for the same coverage. This occurs today in car insurance, where young males pay higher premiums than young females because they have more accidents. The fairness claim is that even though the two groups are being treated differently in terms of premiums, they are treated the same in terms of the profit they generate for the insurer.

- Going to the other extreme, rates can be set at a single level across the entire population: everyone pays the same price for the same insurance regardless of their circumstances and behaviors, which means that every individual incarnates a personalized loss ratio.
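The three applications can be compared side by side in a small sketch. Everything here is assumed for illustration: the customers, their expected claims, the group labels, and the 0.8 target loss ratio are invented, and the group scheme shown is the simplest version (one premium per group), not the constrained individual pricing mentioned in the note on Lemonade.

```python
from statistics import mean

TARGET_LOSS_RATIO = 0.8  # hypothetical: expected claims / premium

def individual_premiums(customers, ratio=TARGET_LOSS_RATIO):
    """Each person is priced by the same loss ratio on their own expected claims."""
    return {c["name"]: c["expected_claims"] / ratio for c in customers}

def group_premiums(customers, ratio=TARGET_LOSS_RATIO):
    """Each group is priced by the same loss ratio on its members' average claims."""
    by_group = {}
    for c in customers:
        by_group.setdefault(c["group"], []).append(c["expected_claims"])
    return {c["name"]: mean(by_group[c["group"]]) / ratio for c in customers}

def flat_premiums(customers, ratio=TARGET_LOSS_RATIO):
    """Everyone pays one price, set from the population's average claims."""
    premium = mean(c["expected_claims"] for c in customers) / ratio
    return {c["name"]: premium for c in customers}

def personal_loss_ratios(customers, premiums):
    """The loss ratio each individual ends up with under a given price list."""
    return {c["name"]: c["expected_claims"] / premiums[c["name"]] for c in customers}

# Invented customers for illustration only.
customers = [
    {"name": "ana", "group": "young", "expected_claims": 400.0},
    {"name": "ben", "group": "young", "expected_claims": 800.0},
    {"name": "cal", "group": "older", "expected_claims": 200.0},
    {"name": "dee", "group": "older", "expected_claims": 600.0},
]

for label, scheme in [("individual", individual_premiums),
                      ("group", group_premiums),
                      ("flat", flat_premiums)]:
    prices = scheme(customers)
    print(label, prices, personal_loss_ratios(customers, prices))
```

Under individual pricing every person carries the same loss ratio and a different premium. Under flat pricing every person pays the same premium and carries a different loss ratio. Group pricing sits between the two.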

It is difficult to prove one fairness application preferable to another, but there is a difference between better and worse understandings of the fairness dilemma. AI Human Impact scores fairness in terms of that understanding – in terms of how deeply a company engages with the dilemma – and not in terms of adherence to one or another definition.

AI Human Impact Example:

Tesla & the Principle of Safety

The Model S was on a divided highway with Autopilot engaged when a tractor trailer drove across the highway perpendicular to the car. Neither Autopilot nor the driver noticed the white side of the tractor trailer against a brightly lit sky, so the brake was not applied. The high ride height of the trailer combined with its positioning across the road and the extremely rare circumstances of the impact caused the Model S to pass under the trailer, with the bottom of the trailer impacting the windshield of the Model S.

This is the first known fatality in just over 130 million miles where Autopilot was activated. Among all vehicles in the US, there is a fatality every 94 million miles. Worldwide, there is a fatality approximately every 60 million miles.

The customer who died in this crash had a loving family and we are beyond saddened by their loss. He was a friend to Tesla and the broader EV community, a person who spent his life focused on innovation and the promise of technology and who believed strongly in Tesla’s mission. We would like to extend our deepest sympathies to his family and friends.

- Tesla Team, A Tragic Loss, Tesla Blog

This horror movie accident represents a particular AI fear: a machine capable of calculating pi to 31 trillion digits cannot figure out to stop when a truck crosses in front. The power juxtaposed with the debility seems ludicrous, as though the machine completely lacks common sense which, in fact, is precisely what it does lack.

For human users, one vertiginous effect of the debility is no safe moment. As with any mechanism, AIs come with knowable risks and dangers that can be weighed, compared, accepted or rejected, but it is beyond that, in the region of unknowable risks – especially those that strike as suddenly as they are unexpected – that trust in AI destabilizes. So, any car accident is worrisome, but this is terrifying: a report from the European Parliament found that the Tesla AI mistook the white tractor trailer crossing in front for the sky, and did not even slow down.

So, how is safety calculated in human terms? As miles driven without accidents? That’s the measure Tesla proposed, but it doesn’t account for the unorthodox perils of AI. Accounting for them is the task of an AI ethics evaluation.
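To put the three figures Tesla cites on a common scale, the sketch below converts each "one fatality every N miles" statement into fatalities per 100 million miles. The mileage numbers come from the quoted blog post (which says "just over" 130 million, so the first rate is approximate); the labels and function name are illustrative.

```python
# Miles driven per fatality, as stated in the quoted Tesla blog post.
MILES_PER_FATALITY = {
    "Autopilot engaged": 130e6,
    "All US vehicles": 94e6,
    "Worldwide": 60e6,
}

def fatalities_per_100m_miles(miles_per_fatality):
    """Convert 'one fatality every N miles' into a rate per 100 million miles."""
    return 100e6 / miles_per_fatality

rates = {name: fatalities_per_100m_miles(miles)
         for name, miles in MILES_PER_FATALITY.items()}

for name, rate in rates.items():
    print(f"{name}: {rate:.2f} fatalities per 100 million miles")
# Autopilot engaged: 0.77 fatalities per 100 million miles
# All US vehicles: 1.06 fatalities per 100 million miles
# Worldwide: 1.67 fatalities per 100 million miles
```

On this measure Autopilot looks safer, but the Autopilot rate rests on a single recorded event, and a miles-per-fatality count says nothing about how unexpected a failure is.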

AI Ethics Principles

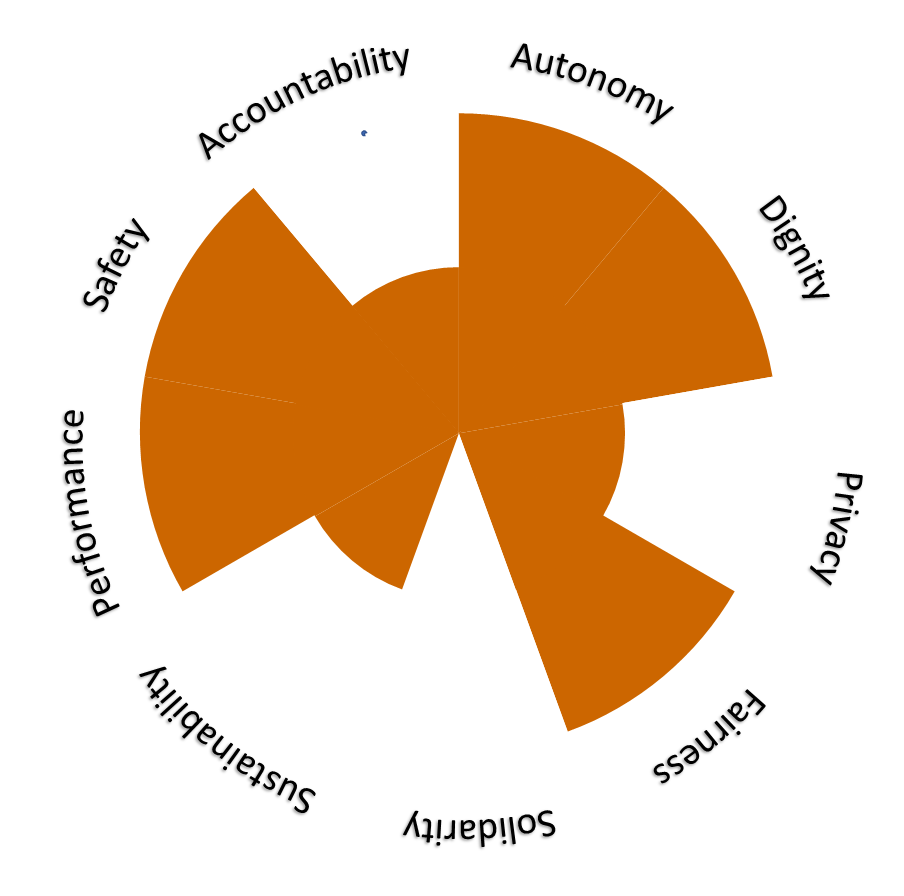

Since 2017, more than 70 AI and big data ethical principles and values guidelines have been published. Because many are ethical in origin, the contributions tend to break along the lines of academic applied ethics. Corresponding with libertarianism there are values of personal freedom. Extending from a utilitarian approach there are values of social wellbeing. Then, on the technical side, there are values focusing on trust and accountability for what an AI does.

Here is the AI Human Impact breakdown:

| Domain | Principles/Values |
| --- | --- |
| Personal | Autonomy |
| | Dignity |
| | Privacy |
| Social | Fairness |
| | Solidarity |
| | Sustainability |
| Technical | Performance |
| | Safety |
| | Accountability |

Each of the mainstream collections of AI ethics principles has its own way of fitting onto that three-part foundation, but the Ethics Guidelines for Trustworthy AI sponsored by the European Commission is representative, as is the Opinion of the Data Ethics Commission of Germany. They are arranged in the table below for comparison, along with the values grounding AI Human Impact.

| AI Human Impact | EC Guidelines Trustworthy AI | German Data Ethics Commission |
| --- | --- | --- |
| Autonomy | Human agency | Self-determination |
| Dignity | | Human dignity |
| Privacy | Privacy and data governance | Privacy |
| Fairness | Diversity, non-discrimination, fairness | |
| Solidarity | | Justice and Solidarity |
| Sustainability | Societal and environmental well-being | Sustainability; Democracy |
| Performance | | |
| Safety | Technical robustness | Security |
| Accountability | Accountability; Transparency | |

There are discrepancies, and some are superficial. The E.C. Guidelines split ‘Accountability’ and ‘Transparency,’ whereas AI Human Impact unites them into a single category. The German Data Ethics Commission joins ‘Justice’ and ‘Solidarity,’ whereas AI Human Impact splits them into ‘Fairness’ and ‘Solidarity.’

Another difference is more profound. Performance as an ethical principle occurs only in the AI Human Impact model because it is extremely important to investors: a primary reason for involvement in the evaluation of AI-intensive companies is the financial return. How well the technology performs, consequently, is critical because an AI that cannot win market share will not have financial, human, or any impact.

A note on performance

Performance as an ethics metric is also critical in this sense: machines that function well eliminate problems. No one asks about accountability or safety when nothing goes wrong: theoretically, a perfectly functioning machine renders significant regions of ethical concern immaterial. Performance – the machine working – could be construed as the highest ethical demand.

Users' Manual of Values

Autonomy

- Self-determination: Giving rules to oneself. (Between slavery of living by others’ rules, and chaos of life without rules.)

- People can escape their own past decisions. (Dopamine rushes from Instagram Likes don’t trap users in trivial pursuits.)

- People can make decisions. (Amazon displays products to provide choice, not to nudge choosing.)

- People can experiment with new decisions: users have access to opportunities and possibilities (professional, romantic, cultural, intellectual) outside those already established by their personal data.

Dignity

- Users hold intrinsic value: they are subjects/ends, not objects/means.

- People treated as individually responsible for – and made responsible for – their choices. (Freedom from patronization.)

- The AI/human distinction remains clear. (Is the voice human, or a chatbot?)

Privacy

- Control over access to one's own personal information along the entire value chain.

- Legitimate interest of the data controller in the information.

- Protections installed against accidental data exposure and cyber-attack.

- People can maintain multiple, independent personal information identities. (Work-life, family-life, romantic-life, can remain unmixed online.)

How much intimate information about myself will I expose for the right job offer, or an accurate romantic match?

Originally, health insurance enabled adventurous activities (like skiing the double black diamond run) by promising to pay the emergency room bill if things went wrong. Today, dynamic AI insurance converts personal information into consumer rewards by lowering premiums in real time for those who avoid risks like the double black diamond. What changed?

An AI chatbot mitigates depression when patients believe they are talking with a human. Should the design – natural voice, and human conversational indicators like the occasional cough – encourage that misperception?

If my tastes, fears and urges are perfectly satisfied by predictive analytics, I become a contented prisoner inside my own data set: I always get what I want, even before I realize that I want it. How – and should – AI platforms be perverted to create opportunities and destinies outside those accurately modeled for who my data says I am?

- Controlling Polarization in Personalization: An Algorithmic Framework

- Inside the Big Data Experience Machine

What’s worth more: freedom and dignity, or contentment and health?

Fairness

- Equal opportunity for individuals. (Classical approach.)

- Equal outcomes for groups, especially those historically marginalized. (Social justice approach.)

- Bias/discrimination suppressed in information gathering and application: Recognition of individual, cultural, and historical biases inhabiting apparently neutral data.

- Data bias amplification (unbalanced outcomes feeding back into processes) is mitigated. Example: an AI resume filter privileging a specific trait creates outcomes that in turn support the privileging.
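The feedback loop in the resume filter example can be illustrated with a toy simulation. This is a deliberately simplified model, not a description of any real hiring system: it assumes the filter is retrained each round on its own past hires, that applicants are evenly split on the trait, and that a hypothetical `sharpness` parameter above 1 makes the filter lean harder on whatever was overrepresented among past hires.

```python
def next_share(share, applicant_share=0.5, sharpness=2.0):
    """Share of trait holders among new hires, given their share among past hires.

    Toy rule: each side's selection weight is its historical share raised to
    `sharpness`. With sharpness 1.0 and an evenly split applicant pool the
    filter only reproduces history; above 1.0 it exaggerates it.
    """
    favored = applicant_share * share ** sharpness
    others = (1 - applicant_share) * (1 - share) ** sharpness
    return favored / (favored + others)

def simulate(initial_share, rounds, **kwargs):
    """Feed each round's hiring outcome back in as the next round's training data."""
    shares = [initial_share]
    for _ in range(rounds):
        shares.append(next_share(shares[-1], **kwargs))
    return shares

# Start from a slight imbalance: 55% of past hires hold the trait.
history = simulate(initial_share=0.55, rounds=5)
for round_number, share in enumerate(history):
    print(f"round {round_number}: {share:.1%} of hires hold the trait")
```

In this toy model a small initial imbalance grows every round until the trait dominates hiring, while a perfectly balanced starting point stays balanced. Mitigation, in these terms, means breaking the loop between outcomes and retraining.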

Solidarity

- No one left behind: training data inclusive so that AI functions for all.

- Max/Min distribution of AI benefits: the most to those who have least.

- Stakeholder participation in AI design/implementation. (What do the other drivers think of the driverless car passing on the left?)
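The max/min idea can be made concrete by comparing it with a simple total-benefit rule. The sketch below is illustrative only: the options, the groups, and the benefit scores are invented, and real benefits are rarely reducible to a single number.

```python
def maximin_choice(options):
    """Pick the option whose worst-off group receives the most."""
    return max(options, key=lambda name: min(options[name].values()))

def total_benefit_choice(options):
    """Pick the option with the largest summed benefit, ignoring distribution."""
    return max(options, key=lambda name: sum(options[name].values()))

# Invented benefit scores per group for three hypothetical deployment options.
options = {
    "option_a": {"group_1": 12, "group_2": 2, "group_3": 3},
    "option_b": {"group_1": 6, "group_2": 5, "group_3": 5},
    "option_c": {"group_1": 5, "group_2": 4, "group_3": 6},
}

print("max/min picks:", maximin_choice(options))
print("total benefit picks:", total_benefit_choice(options))
```

Here the two rules disagree: option_a produces the largest total but leaves one group with very little, while option_b gives the most to whoever ends up with the least.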

Sustainability

- Established metrics for socio-economic flourishing at the business/community intersection include infant mortality and healthcare, hunger and water, sewage and infrastructure, poverty and wealth, education and technology access. (17 UN Sustainable Development Goals.)

Which is primary: equal opportunity for individuals, or equal outcomes for race, gender and similar identity groups?

AI catering to individualized tastes, vulnerabilities, and urges effectively diminishes awareness of the others’ tastes, vulnerabilities and urges – users are decreasingly exposed to their music, their literature, their values and beliefs. On the social level, is it better for people to be content, or to be together?

An AI detects breast cancer from scans earlier than human doctors, but it trained on data from white women. Should the analyzing pause until data can be accumulated – and efficacy proven – for all races?

Those positioned to exploit AI technology will exchange mundane activities for creative, enriching pursuits, while others inherit joblessness and tedium. Or so it is said. Who decides what counts as creative, interesting and worthwhile versus mundane, depressing and valueless – and do they have a responsibility to uplift their counterparts?

What counts as fair? Aristotle versus Rawls.

Is equality about verbs (what you can do), or nouns (who you are, what you have)?

In the name of solidarity, how much do individuals sacrifice for the community?

Performance

- Accuracy & Efficiency

- Personalized quality, convenience, pleasure.

Safety

- Secure, resilient and empowered with fallbacks to mitigate failures.

- Human oversight: A designer or deployment supervisor holds power to control AI actions.

Accountability

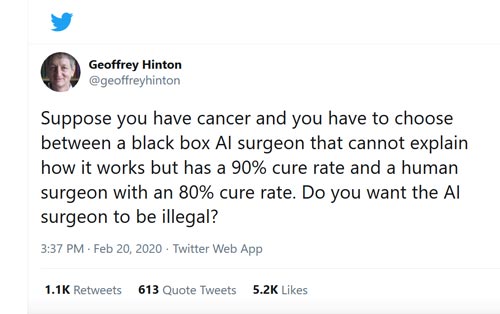

- Explainability: Ensure AI decisions can be understood and traced by human intelligence, which may require debilitating AI accuracy/efficiency. (As a condition of the possibility of producing knowledge exclusively through correlation, AI may not be explainable.) Interpretability – calculating the weights assigned to datapoints in AI processing – may substitute for unattainable explainability. (Auditors understand what data most influences outputs, even if they cannot perceive why.)

- Transparency about the limitations of explanations: If the AI is a black box, is the opacity clear?

- Redress for users implies an ability to contest AI decisions.
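Interpretability in the sense described above, seeing which inputs most influence an output without knowing why, can be sketched with a simple weighted-sum model. The weights, input names, and values below are invented for illustration; real AI systems are rarely this simple, and extracting comparable weights from them usually requires dedicated tooling.

```python
def contributions(weights, inputs):
    """Contribution of each input to the score: weight times value."""
    return {name: weights[name] * inputs[name] for name in weights}

def score(weights, inputs):
    """The model's output: the sum of all contributions."""
    return sum(contributions(weights, inputs).values())

def rank_by_influence(weights, inputs):
    """Input names ordered from most to least influential on this output."""
    contrib = contributions(weights, inputs)
    return sorted(contrib, key=lambda name: abs(contrib[name]), reverse=True)

# Hypothetical learned weights and one case's (standardized) input values.
weights = {"signal_a": -1.5, "signal_b": 0.8, "signal_c": 0.1}
inputs = {"signal_a": 2.0, "signal_b": 1.0, "signal_c": 0.5}

print("score:", score(weights, inputs))
print("most influential first:", rank_by_influence(weights, inputs))
```

An auditor reading this ranking learns that `signal_a` dominated the result, which is interpretability, but learns nothing about why that signal should matter, which would be explainability.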

A chatbot responds to questions about history, science and the arts instantly, and so delivers civilization’s accumulated knowledge with an efficiency that withers the ability to research and to discover for ourselves. (Why exercise thinking when we have easy access to everything we want to know?) Is perfect knowledge worth intellectual stagnation?

Compared to deaths per car trip today, how great a decrease would be required to switch to only driverless cars, ones prone to the occasional glitch and consequent, senseless wreck?

If an AI picks stocks, predicts satisfying career choices, or detects cancer, but only if no one can understand how the machine generates knowledge, should it be used?

What’s worth more, understanding or knowledge? (Knowing, or knowing why you know?)

Which is primary, making AI better, or knowing who to blame, and why, when it fails?

What, and how much will we risk for better accuracy and efficiency?

What counts as risk, and who takes it?

A driverless car AI system refines its algorithms by imitating driving habits of the human owner (driving distance between cars, accelerating, braking, turning radiuses). The car later crashes. Who is to blame?

Assessment checklist

While every development and application is unique, the below list of questions orients human impact evaluators toward potential ethical problems and dilemmas surrounding AI technology.

The checklist is modified from the European Commission’s Assessment List for Trustworthy Artificial Intelligence.

- Does the AI empower users to do new things?

- Does it provide opportunities that were previously unthinkable?

- Does it provide opportunities that were previously unavailable?

- Does the AI short-circuit human self-determination?

- Have measures been taken to mitigate the risk of manipulation, and to eliminate dark patterns?

- Has the risk of addiction been minimized?

- If the AI system could generate over-reliance by end-users, are procedures in place to avoid end-user over-reliance?

- Could the AI system affect human autonomy by interfering with the end-user’s decision-making process in any other unintended and undesirable way?

- Are users respected as the reason for the AI? Does the machine primarily serve the users' projects and goals, or does it employ users as tools or instruments in external projects?

- Are mechanisms established to inform users about the full range of purposes, and the limitations of the decisions generated by the AI system?

- Are the technical limitations and potential risks of the AI communicated to users, such as its level of accuracy and/or error rates?

- Does the AI system simulate social interaction with or between end-users or subjects (chatbots, robo-lawyers and similar)?

- Could the AI system generate confusion about whether users are interacting with a human or AI system? Are end-users informed that they are interacting with an AI system?

- Is the AI system trained or developed by using or processing personal data?

- Do users maintain control over access to their personal information? Is it within their power to conceal and to reveal what is known and shared?

- Is data minimization, in particular personal data, in effect?

- Is the AI system aligned with relevant standards (e.g. ISO, IEEE) or widely adopted protocols for (daily) data management and governance?

- Are the following measures, or non-European equivalents, established?

- Data Protection Impact Assessment (DPIA).

- Designate a Data Protection Officer (DPO) and include them at an early stage in the development, procurement or use phase of the AI system.

- Oversight mechanisms for data processing (including limiting access to qualified personnel, mechanisms for logging data access, and for making modifications).

- Measures to achieve privacy-by-design and default (e.g. encryption, pseudonymization, aggregation, anonymisation).

- The right to withdraw consent, the right to object, and the right to be forgotten implemented into the AI's development.

- Have privacy and data protection implications been considered for data collected, generated, or processed over the course of the AI system's life cycle?

- Are equals treated equally and unequals treated unequally by the AI? (Aristotle’s definition of fairness.)

- Have procedures been established to avoid creating or reinforcing unfair bias in the AI system for input data, as well as for the algorithm design?

- Is your statistical definition of fairness commonly used? Were other definitions of fairness considered?

- Was a quantitative analysis or metric developed to test the applied definition of fairness?

- Was the diversity and representativeness of end-users and subjects in the data considered?

- Were tests applied for specific target groups, or problematic use cases?

- Did you consult with the impacted communities about fairness definitions, for example representatives of the elderly, or persons with disabilities?

- Were publicly available technical tools that are state-of-the-art researched to improve understanding of the data, model, and performance?

- Did you assess and put in place processes to test and monitor for potential biases during the entire lifecycle of the AI system (e.g. biases due to possible limitations stemming from the composition of the data sets used, such as lack of diversity or non-representativeness)?

- Is there a mechanism for flagging issues related to bias, discrimination, or poor performance of the AI?

- Are clear steps and ways of communicating established for how and to whom such issues can be raised?

- Is the AI designed so that no one is left behind?

- Does the AI deliver the maximum advantage to those users who are most disadvantaged? (Does the most go to those who have least? John Rawls’ definition of Solidarity/Justice.)

- Is the AI adequate to the variety of preferences and abilities in society?

- Were mechanisms considered to include the participation of the widest possible range of stakeholders in the AI’s design and development?

- Were Universal Design principles taken into account during every step of the planning and development?

- Did you assess whether the AI system's user interface is usable by those with special needs or disabilities, or those at risk of exclusion?

- Were end-users or subjects in need of assistive technology consulted during the planning and development phase of the AI system?

- Was the impact of the AI on all potential subjects taken into account?

- Could there be groups who might be disproportionately affected by the outcomes of the AI system?

- For societies around the world, does the AI advance toward, or recede from, the United Nations’ Sustainable Development Goals? For elaboration of the particular goals, see: United Nations’ Sustainable Development Goals

1: No poverty

2: Zero hunger

3: Good health and well-being

4: Quality education

5: Gender equality

6: Clean water and sanitation

7: Affordable and clean energy

8: Decent work and economic growth

9: Resilient industry, innovation, and infrastructure

10: Reducing inequalities

11: Sustainable cities and communities

12: Responsible consumption and production

13: Climate action

14: Life below water

15: Life on land

16: Peace, justice, and strong institutions

17: Partnerships for the Goals

- Has a definition of what counts as performance been articulated for the AI: Accuracy? Rapidity? Efficiency? Convenience? Pleasure?

- Is there a clear and distinct performance metric?

- Does the metric correspond with human experience?

- Does the AI outperform humans? Other machines?

- Could the AI adversely affect human or societal safety in case of risks or threats such as design or technical faults, defects, outages, attacks, misuse, inappropriate or malicious use?

- Were the possible threats to the AI system identified (design faults, technical faults, environmental threats), and also the possible consequences?

- Is there a process to continuously measure and assess risks?

- Is there a proper procedure for handling the cases where the AI system yields results with a low confidence score?

- Were potential negative consequences from the AI system learning novel or unusual methods to score well on its objective function considered?

- Can the AI system's operation invalidate the data or assumptions it was trained on? Could this lead to adverse effects?

- Could the AI system cause critical, adversarial, or damaging consequences in case of low reliability or reproducibility?

- Are there verification and validation methods, and documentation (e.g. logging) to evaluate and ensure different aspects of the AI system’s reliability and reproducibility?

- Are failsafe fallback plans defined and tested to address AI system errors of whatever origin, and are governance procedures in place to trigger them?

- Have the humans exercising oversight been given specific training on how to do so? This covers humans in-the-loop (human intervention in every decision of the system), on-the-loop (human monitoring and potential intervention in the system’s operation), and in-command (human oversight of the overall activity of the AI system, including its broader economic, societal, legal and ethical impact, and the ability to decide when and how to use the AI system in any particular situation, including the decision not to use it and the ability to override a decision it has made).

- Is there a ‘stop button’ or procedure to safely abort an operation when needed?

- How exposed is the AI system to cyber-attacks?

- Were different types of vulnerabilities and potential entry points for attacks considered, such as:

  - Data poisoning (i.e. manipulation of training data).

  - Model evasion (i.e. causing the model to classify data according to the attacker's will).

  - Model inversion (i.e. inferring the model parameters).

- Did you red-team and/or penetration test the system?

- Is the AI system certified for cybersecurity and compliant with applicable security standards?

- Would erroneous or otherwise inaccurate output significantly affect human life?

- Can responsibility for the development, deployment, use and output of AI systems be attributed?

- Can you explain the AI's decisions to users? Are you transparent about the limitations of explanations?

- Can you trace back to which data, AI model, and/or rules were used by the AI system to make a decision or recommendation?

- Are accessible mechanisms for accountability in place to ensure contestability and/or redress when adverse or unjust impacts occur?

- Have redress-by-design mechanisms been put in place?

- Did you establish mechanisms that facilitate the AI system’s auditability (e.g. traceability of the development process, the sourcing of training data and the logging of the AI system’s processes, outcomes, positive and negative impact)?

- Did you consider establishing an AI ethics review board or a similar mechanism to discuss the overall accountability and ethics practices?

- Does review go beyond the development phase?

- Did you establish a process for third parties (e.g. suppliers, end-users, subjects, distributors/vendors or workers) to report potential vulnerabilities, risks, or biases in the AI system?

Report

Scoring

A three-point metric scores ethical performance indicators in AI-intensive companies. A score of 2 corresponds with a positive evaluation, 1 corresponds with neutral or not material, and 0 corresponds with inadequacy. The scores convert into objective investment guidance, both individually and as a summed total.

Investors who are particularly interested in privacy, for example, or safety, may choose to highlight those metrics in their analysis of investment opportunities. Materiality is also significant as AI-intensive companies vary widely in terms of risk exposure across the values spectrum: Tesla will be evaluated in terms of safety, while TikTok/ByteDance engages privacy concerns.

Aggregate score

Investors may widen the humanist vision to include the full range of AI ethics concerns, and so focus on the overall impact score derived from a company. Because personal freedom is the orienting metric of responsible AI investing, it is double weighted in the aggregating formula.

- Personal Freedom

  - Autonomy (Score 0 - 2)

  - Dignity (Score 0 - 2)

  - Privacy (Score 0 - 2)

- Social Wellbeing

  - Fairness (Score 0 - 2)

  - Solidarity (Score 0 - 2)

  - Sustainability (Score 0 - 2)

- Technical Trustworthiness

  - Performance (Score 0 - 2)

  - Safety (Score 0 - 2)

  - Accountability (Score 0 - 2)

- AI Human Impact Score
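
The aggregation described above can be sketched in a few lines of Python. This is an illustration only: the text states that the nine metrics are scored 0 to 2 and that personal freedom is double weighted, but it does not give the exact formula. The sketch assumes a plain weighted sum in which the three Personal Freedom metrics count twice and all other metrics count once. The names `CATEGORIES`, `WEIGHTS`, `aggregate_score`, and `MAX_SCORE` are hypothetical.

```python
# Hypothetical sketch of an aggregate AI Human Impact score.
# Assumption: a weighted sum, with Personal Freedom weighted 2 and the
# other two categories weighted 1. The source does not state the formula.

CATEGORIES = {
    "Personal Freedom": ["Autonomy", "Dignity", "Privacy"],
    "Social Wellbeing": ["Fairness", "Solidarity", "Sustainability"],
    "Technical Trustworthiness": ["Performance", "Safety", "Accountability"],
}

WEIGHTS = {
    "Personal Freedom": 2,           # double weighted, per the text
    "Social Wellbeing": 1,           # assumed weight
    "Technical Trustworthiness": 1,  # assumed weight
}


def aggregate_score(scores):
    """Return the weighted sum of the nine metric scores (each 0, 1, or 2)."""
    total = 0
    for category, metrics in CATEGORIES.items():
        for metric in metrics:
            value = scores[metric]
            if value not in (0, 1, 2):
                raise ValueError(f"{metric} must be scored 0, 1, or 2")
            total += WEIGHTS[category] * value
    return total


# Highest possible total under these assumed weights.
MAX_SCORE = aggregate_score(
    {metric: 2 for metrics in CATEGORIES.values() for metric in metrics}
)

if __name__ == "__main__":
    # Invented scores for an imaginary company, for illustration only.
    example = {
        "Autonomy": 2, "Dignity": 1, "Privacy": 0,
        "Fairness": 1, "Solidarity": 1, "Sustainability": 2,
        "Performance": 2, "Safety": 2, "Accountability": 1,
    }
    print(aggregate_score(example), "out of", MAX_SCORE)
```

Under these assumptions the maximum total is 24, of which 12 points come from Personal Freedom. An investor focused on a single value, such as privacy or safety, would read the individual metric rather than the sum.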

Sources for scoring

1. Self-reporting: AI Human Impact Due Diligence Questionnaire.

2. Independent ethical evaluation.

3. Data gathering from public sources.

About

AI Human Impact: Toward a Model for Ethical Investing in AI-Intensive Companies, Journal of Sustainable Finance & Investment, 2021, Version of Record.

PrePrint @ Social Science Research Network: Full text.

AI Human Impact: AI-Intensive Companies Surveyed in Human and Ethical Terms for Investment Purposes, 2020, Global AI: Boston. Video. Deck.

How to put AI ethics into practice? AI Human Impact, 2020, Vlog at AI Policy Exchange, National Law School of India University, Bangalore.

AI Human Impact: Toward a Model for Ethical Investing in AI-Intensive Companies, 2020, Podcast • AI Asia Pacific Institute.

Transforming Ethics in AI through Investment, 2020, Article at AI Asia Pacific Institute.

AI Human Impact in panel discussion: Digital Ethics and AI Governance, 2020, Informa Tech, AI Summit NY.

AI Human Impact in panel discussion: AI in RegTech 2020, ReWork Virtual Summit.

Regulation without prohibition in panel discussion: AI in RegTech, 2020, ReWork Virtual Summit.

On AI Human Impact, 2020, AI Summit (Informa), Silicon Valley.

Why AI can never be explainable..., 2020, AI Summit (Informa), Silicon Valley.

Practical experience

Assessed a non-invasive European AI medical device that used machine learning to analyze electrical signals of the heart (EKG) to predict the risk of cardiovascular disease. The startup’s name is redacted to honor a non-disclosure agreement.

Description. Team members.

Emergency medical dispatchers fail to identify approximately 25% of cases of out-of-hospital cardiac arrest, and thus lose the opportunity to give the caller instructions in cardiopulmonary resuscitation. A team led by Stig Nikolaj Blomberg (Emergency Medical Services Copenhagen, and Department of Clinical Medicine, University of Copenhagen, Denmark) examined whether a machine learning framework could recognize out-of-hospital cardiac arrest and alert the emergency medical dispatcher.

The results of a retrospective study are published here.

The results of a randomized clinical trial are published here.

We are working with Blomberg and his team to evaluate the ethical implications of using machine learning in this context.

Description. Team members.

The team of Dr. Andreas Dengel at the German Research Center for Artificial Intelligence (DFKI) used a neural network to analyze skin images and classify three skin tumors: Melanocytic Naevi, Melanoma, and Seborrheic Keratosis. The result of their work is available here: IJCNN Interpretability (1)

We are working with Andreas Dengel and his team to evaluate the implications of using deep learning in this context.

Description. Team members.

What a Philosopher Learned at an AI Ethics Evaluation, 2020, AI Ethics Journal, 1(1)-4. Full text.

Lecturer, Doctoral Program, University of Trento, Italy. AI Ethics Today.

Philosophy Department, Pace University, New York City. CV.

Connect

James Brusseau (PhD, Philosophy) is author of books, articles, and digital media in the history of philosophy and ethics. He has taught in Europe and Mexico, and currently teaches at Pace University near his home in New York City.

linkedin.com/in/james-brusseau

connect@aihumanimpact.fund

jbrusseau@pace.edu